
Situation awareness or situational awareness (SA) is a function of the human mind in complex, dynamic and/or high-risk settings. In terms of cognitive psychology, SA refers to a decision-maker's dynamic mental model of his or her evolving task situation. It is the awareness and understanding of the operational environment and other situation-specific factors affecting current and future goals; its purpose is to enable rapid and appropriate decisions and effective actions. Complete, accurate and up-to-the-minute SA is considered essential for those who control complex, dynamic systems and high-risk situations, such as combat pilots, air traffic controllers, emergency responders, surgical teams and military commanders. Stated simply, having SA means "knowing what is going on so you can figure out what to do." Lacking SA or having inadequate SA has consistently been identified as one of the primary factors in accidents attributed to human error. Maintaining good SA involves the acquisition, representation, interpretation and utilization of information in order to anticipate future developments, make intelligent decisions and stay in control.

SA is now a key concept in human factors research, aviation, command and control, and indeed in any domain where the effects of ever-increasing technological and situational complexity on the human decision-maker are a concern.

Origins

Although the term is fairly recent, the concept itself appears to go back a long way in the history of military thinking - it is recognisable in Sun Tzu's Art of War, for instance.

Before being widely adopted by human factors scientists in the 1990s, the term was first used by U.S. Air Force (USAF) fighter aircrew returning from war in Korea and Vietnam (see Watts, 2004). They identified having good SA as the decisive factor in air-to-air combat engagements - the "ace factor" (Spick, 1989). Survival in a dogfight was typically a matter of observing the opponent's current move and anticipating his next move a fraction of a second before he could observe and anticipate one's own. USAF pilots also came to equate SA with the "observe" and "orient" phases of the famous observe-orient-decide-act loop (OODA Loop) or Boyd cycle, as described by the USAF fighter ace and war theorist Col. John Boyd. In combat, the winning strategy is to "get inside" your opponent’s OODA loop, not just by making your own decisions quicker but also by having better SA than the opponent, and even changing the situation in ways that the opponent cannot monitor or even comprehend. Losing one's own SA, in contrast, equates to being "out of the loop".

Definitions and models

Stated in lay terms, SA is simply “knowing what is going on so you can figure out what to do” (Adam, 1993). It is also “what you need to know not to be surprised” (Jeannot et al., 2003). Intuitively it is one's answers (or ability to give answers) to such questions as: What is happening? Why is it happening? What will happen next? What can I do about it?

Consistent with such notions, a variety of formal definitions of SA have been suggested:

- "the perception of elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future." (Endsley, 1988, 1995a, 2000)

- “all knowledge that is accessible and can be integrated into a coherent picture, when required, to assess and cope with a situation” (Sarter and Woods, 1991)

- "the combining of new information with existing knowledge in working memory and the development of a composite picture of the situation along with projections of future status and subsequent decisions as to appropriate courses of action to take" (Fracker 1991)

- "the continuous extraction of environmental information along with integration of this information with previous knowledge to form a coherent mental picture, and the end use of that mental picture in directing further perception and anticipating future need" (Dominguez et al. 1994)

- "adaptive, externally-directed consciousness that has as its products knowledge about a dynamic task environment and directed action within that environment" (Smith & Hancock, 1995)

Endsley's model

The established and most popular definition of SA is that provided by US human factors researcher Mica Endsley (1988, 1995a):

"Situation awareness is the perception of elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future."

(An aside: Endsley has always used the term situation awareness, but many others use the term situational awareness. Even though Endsley's is widely taken to be the formal definition, the latter version of the term appears to be used about twice as often, going by GoogleFight results.)

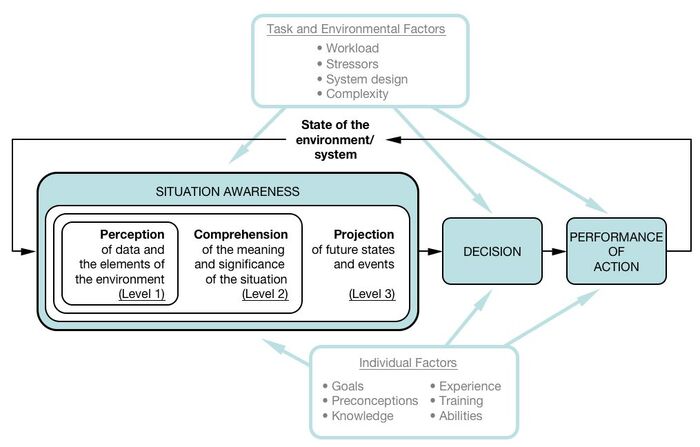

Perception, comprehension and projection are, in Endsley's account, the three essential components of SA. They support the active maintenance of an integrated mental model at three hierarchic levels:

- Perception involves monitoring, cue detection and simple recognition; it produces Level 1 SA, the most basic level of SA, which is an awareness of multiple situational elements (objects, events, people, systems, environmental factors) and their current states (locations, conditions, modes, actions).

- Comprehension involves pattern recognition, interpretation and evaluation; it produces Level 2 SA, an understanding of the overall meaning of the perceived elements - how they fit together as a whole, what kind of situation it is, what it means in terms of one's mission goals.

- Projection involves anticipation and mental simulation; it produces Level 3 SA, an awareness of the likely evolution of the situation, its possible/probable future states and events. This is the highest level of SA.

Endsley (1995a) also makes a distinction between situation awareness, "a state of knowledge," and situation assessment, "the processes used to achieve that knowledge." The terms perception, comprehension and projection, however, might be read either way - as components of a state of knowledge (e.g., perception is knowledge based on observation) or as sub-processes used to achieve that state (e.g., perception is the process of detecting and recognizing stimuli). Either way, whatever processes happen to be involved in the production of a situational mental model, SA is the knowledge content of that mental model.

Endsley & Jones (1997) draw a parallel between Endsley's three levels of SA and the “cognitive hierarchy” of data–information–knowledge–understanding as suggested by Cooper (1995):

Data correlated becomes information. Information converted into situational awareness becomes knowledge. Knowledge used to predict the consequences of actions leads to understanding.

Endsley and Jones suggest that “knowledge” in this sense equates to level 1 SA and “understanding” equates to levels 2 and 3 SA.

Note that while Endsley describes a hierarchy of levels of SA founded on the perception of environmental cues and raw data, she insists that SA is not entirely data-driven. Rather, the processes used to maintain SA alternate between data-driven (bottom-up) and goal-driven (top-down) processes (Endsley, 2000). In addition, SA is not only produced by the processes of situation assessment, it also drives those same processes in a recurrent fashion. For example, one’s current awareness can determine what one pays attention to next and how one interprets the information perceived (Endsley, 2000).

Although widely used, this dominant theory is not immune from criticism. Some more sceptical commentators query whether it is simply one of many three-level models of cognition that already existed under different names (e.g. Card, Moran and Newell's Model Human Processor). Additionally, some commentators (e.g. Smith & Hancock, 1995) question the theory's reliance on the concept of mental models, a concept that is itself ill-defined and subject to argument. Nonetheless, this definition is familiar and extremely popular, to the extent that the notion of SA has become almost synonymous with it. Where SA comes from, how it is structured and what people do with it are somewhat more difficult to get to grips with (see Controversies below).

SA and Sensemaking

Somewhat confusingly, a slightly different use of the term SA has evolved in the military command and control (C2) arena. In this case, the term situational awareness is applied to just knowing about the physical elements in the environment (equivalent to Endsley’s level 1 SA), while all the rest (equating to levels 2 and 3 as described by Endsley) is referred to as situational understanding. You might see, for example, articles saying that it is important for warfighters to go “beyond situational awareness and achieve situational understanding” (e.g., Marsh, 2000). The processes involved in arriving at and maintaining situational understanding in C2 are termed sensemaking (e.g., Garstka & Alberts, 2004).

These different uses of terminology are rarely made explicit. But why have they come about in the first place? The reasons may be as follows: Most of Endsley’s work has concentrated on individual SA at the tactical level, where the immediate physical environment changes from one moment to the next. The individual's goal is typically to assess the perceived situation in order to project where different physical elements will be in the next moment. C2 theorists, in contrast, have focused on how shared SA (see below) is developed for battlespace command & control at the operational level, where the broader military situation is far more complex, far more uncertain, and evolves over hours and days. The aim here is to collectively make sense of the enemy’s intentions and capabilities, as well as the (often unintended) effects of one’s own actions on a complex system of systems.

Team and shared SA

Generally, people work not as isolated individuals but as members of teams and organizations, and it is sometimes important or necessary to shift the focus of human-factors analysis from the individual decision-maker or actor to the whole team. This implies a shift of focus from individual SA to team SA and/or shared SA.

There is currently a lot of emphasis on the need for shared SA, especially in the context of distributed sensemaking and virtual teams. The terms "team SA" and "shared SA" (or "shared awareness and understanding") are sometimes used interchangeably, but a distinction has been made in the literature, notably by Endsley. This distinction is best understood in relation to goals. A team is not just an arbitrary group of people but a group with a specific purpose such that the individual members are working towards a common goal. As defined by Salas et al. (1992), a team is:

"a distinguishable set of two or more people who interact dynamically, interdependently and adaptively toward a common and valued goal/objective/mission, who have each been assigned specific roles or functions to perform, and who have a limited life span of membership."

Thus each team member has an overall goal (purpose) that is shared with all other team members plus one or more personal subgoals (functions, tasks). Some personal subgoals may overlap between multiple team members, while others may be unique to specific roles. Associated with each goal or subgoal are SA requirements (i.e., items that need to be monitored and understood on an ongoing basis if the goal is to be successfully accomplished). Where subgoals are shared, so too are SA requirements; insofar as subgoals differ, SA requirements will likewise differ. Typically, then, some of the SA requirements in a team are shared between multiple team members while others are non-shared or distributed. Artman and Garbis (1988) thus defined team SA as:

Two or more agents’ active construction of a situation model which is partly shared and partly distributed and from which they can anticipate important future states in the near future.

Overall team SA can then be conceived of as the degree to which all team members possess the SA required for their collective responsibilities, both shared and distributed, while shared SA can be defined as "the degree to which team members possess the same SA on shared SA requirements" (Endsley, 1995).
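The distinction between team SA and shared SA can be made concrete with a small sketch. The code below is only one possible operationalisation and is not taken from Endsley or any other cited source: the data layout, the function names `individual_sa` and `shared_sa`, the use of the weakest member's score as the team figure, and all values are assumptions made for illustration.

```python
from itertools import combinations

# Hypothetical ground truth and team members; every name and value is illustrative.
ground_truth = {"fuel": "low", "weather": "storm", "traffic": "clear"}

# Each member: the items their role requires, and what they currently believe.
team = {
    "pilot": {
        "requires": {"fuel", "weather", "traffic"},
        "believes": {"fuel": "low", "weather": "storm", "traffic": "clear"},
    },
    "copilot": {
        "requires": {"fuel", "weather"},
        "believes": {"fuel": "ok", "weather": "storm"},
    },
}

def individual_sa(member):
    """Fraction of a member's own SA requirements that match ground truth."""
    required = member["requires"]
    hits = sum(member["believes"].get(item) == ground_truth[item] for item in required)
    return hits / len(required)

def shared_sa(a, b):
    """Fraction of requirements common to both members on which they agree."""
    common = a["requires"] & b["requires"]
    if not common:
        return None  # no shared requirements, so shared SA is undefined here
    same = sum(a["believes"].get(item) == b["believes"].get(item) for item in common)
    return same / len(common)

# Assumption: the team is only as aware as its least aware member.
team_sa = min(individual_sa(m) for m in team.values())

# Shared SA for every pair of members, keyed by the (sorted) pair of names.
pair_sa = {
    (x, y): shared_sa(team[x], team[y]) for x, y in combinations(sorted(team), 2)
}

print(team_sa)
print(pair_sa)
```

Note that in this sketch shared SA measures agreement only: two members who hold the same mistaken belief would still count as sharing SA on that item, which is why it is reported separately from accuracy against ground truth.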

Shu & Furuta (2005) also note that the term shared SA should not be taken to imply some kind of united “group mind”, since a team consists of only individuals and shared SA arises only in the cooperative interaction of their individual minds. Team members therefore need to be able to monitor, understand and anticipate the SA needs and information requirements of their colleagues in order to adjust their own actions accordingly. Hence Shu & Furuta define team SA as not just the sum of shared SA and distributed SA in a team but also including the mutual awareness of one another’s minds as they interact as a team.

Measurement of SA

Since around 1990, dozens of techniques for measuring situational awareness in individuals and teams have been developed. The variety of techniques can be classified in several ways. Typically, measures of SA are divided into three broad categories:

- Explicit measures are those which seek to capture how people actually perceive and understand the key elements of the situation; they involve the use of “probes” or questions designed to prompt subjects to self-report their actual SA.

- Implicit measures are those in which the state of subjects’ SA is inferred from indirect evidence, such as their:

  - task performance

  - communications behaviour

  - physiological activity

- Subjective measures are those which use ratings of (perceived) SA given by one of the following:

  - the subject himself (self-ratings)

  - a fellow team-member (peer-ratings)

  - an expert observer (observer-ratings)

Explicit measures of SA

Explicit measures offer the most direct approach to SA measurement. The best-known (and most-used) technique is Endsley’s (1995b) SAGAT (Situation Awareness Global Assessment Technique), in which at certain intervals the task or simulation is temporarily frozen and subjects are presented with a set of predetermined multiple-choice questions or “probes” about the situation. Probe techniques have a high degree of validity, but pre-trial preparation and post-trial data analysis can be very labour-intensive. The development of appropriate probe questions for a given task and scenario requires a prior analysis of the subject’s SA requirements, which is a kind of cognitive task analysis identifying the various elements of perception, comprehension and projection that the subject needs in order to achieve his or her task goals. Subjects’ responses to such probes are then scored against records of ground truth (items that are a matter of interpretation may be scored by subject matter experts).
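The scoring step described above, comparing probe responses with ground truth, can be sketched as follows. This is a minimal illustration and not the SAGAT procedure itself: the record layout, the function name `score_probes`, the example answers and the choice to report one accuracy figure per SA level are all assumptions.

```python
from collections import defaultdict

# Hypothetical probe records: (SA level, subject's answer, ground-truth answer).
# Levels follow Endsley's scheme: 1 = perception, 2 = comprehension, 3 = projection.
responses = [
    (1, "north", "north"),
    (1, "3 aircraft", "4 aircraft"),
    (2, "hostile", "hostile"),
    (3, "will intercept", "will turn away"),
]

def score_probes(records):
    """Return the proportion of correct answers per SA level."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for level, answer, truth in records:
        total[level] += 1
        if answer == truth:
            correct[level] += 1
    return {level: correct[level] / total[level] for level in sorted(total)}

scores = score_probes(responses)
print(scores)  # {1: 0.5, 2: 1.0, 3: 0.0}
```

Exact string matching is used here only for brevity; items that are a matter of interpretation would need a judgement from a subject matter expert rather than an equality test.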

Alternative probe techniques include the use of open questions embedded as verbal communications during the task (known as “real-time probes”). This method is less intrusive and more “naturalistic” than artificially interrupting and freezing the task (Jones & Endsley, 2000). Another technique is to periodically ask the subject to provide a situation report.

Implicit measures of SA

Implicit measures of SA may be derived by analysing certain types of objective data.

Communications analysis takes the subject’s information reports and information requests during the performance of a task as indirect evidence of SA or the lack of it. Tracking information reports and requests across a group or network can also provide evidence of sensemaking processes and the spread of shared SA. The WESTT (Workload, Error, SA, Teamwork and Time) tool uses communication analysis and social network analysis to derive indirect measures of SA (Baber et al, 2004).

Performance analysis can provide some evidence of what the subject does or does not know. For example, a subject who hits many targets is presumably demonstrating awareness of those targets. Performance-based measures can also involve some artificial intervention in the task: the time it takes a subject to react to a potentially critical event or “scenario injection” can be taken to be a sign of when (and if) he first became aware of it. The link between SA and performance is problematic, however, since successful performance is not necessarily a sign of good SA, and erroneous SA need not always lead to erroneous performance (Baxter & Bass, 1998). Moreover, good SA on the assessed task or sub-task may have been developed at the expense of low SA on some other task (Endsley, 1995b).

Psychophysiological data such as EEG and EOG response patterns may be correlated with particular states or changes in state of SA (e.g., French et al, 2007).

Subjective measures of SA

Subjective techniques vary in their level of complexity and refinement. Some consist of just a single, overall SA rating (e.g., 1-7) while others use multiple scales which represent a breakdown of SA into a number of different key facets or aspects of interest. SART (the Situation Awareness Rating Technique) has 10 rating scales derived from military pilots’ personal constructs of SA and the factors affecting it (Taylor, 1989). Some techniques are fairly generic and could be presented to any kind of operator or decision-maker, while others are designed to reflect the unique SA requirements of a specific task or role. Finally, techniques differ in terms of who is asked to provide subjective ratings:

- Self-ratings are given by subjects themselves to describe their own SA (e.g., Taylor, 1989).

- Observer-ratings are given by someone outside the task (usually a subject-matter expert) observing the subjects’ performance (e.g., Bell & Lyon, 2000).

- Peer-ratings are used to evaluate SA in teams: one team member gives his subjective rating of another team member’s SA and vice versa (e.g., Carretta et al, 1996).

Self-ratings of SA are relatively easy to implement and can even be employed in actual task environments, such as in flight (Metalis, 1993). For example, PSAQ (Participant SA Questionnaire) is a self-rating technique which simply asks for a rating of one’s overall SA on a scale of 1-10. Multiple ratings can often be taken during a task, which is useful for comparison across time and different conditions, but only relative differences can be compared across different subjects since a rating of “4” given by one rater may not mean the same thing as a rating of “4” given by another (Fracker, 1991).
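One common way to compare only relative differences is to standardise each rater's scores against that rater's own mean and spread. The sketch below uses z-scores for this; that choice is an assumption for illustration and is not a method prescribed by Fracker (1991) or by PSAQ, and the rater names and ratings are invented.

```python
from statistics import mean, pstdev

# Hypothetical self-ratings (1-10 scale) taken at four points in a task.
ratings = {
    "rater_a": [4, 5, 6, 5],
    "rater_b": [7, 8, 9, 8],
}

def standardise(values):
    """Convert one rater's ratings to z-scores relative to that rater's own mean."""
    m = mean(values)
    sd = pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]  # a constant rater shows no relative change
    return [(v - m) / sd for v in values]

relative = {rater: standardise(vals) for rater, vals in ratings.items()}

for rater, zs in relative.items():
    print(rater, [round(z, 2) for z in zs])
```

In this example the two raters use different parts of the scale, yet their standardised profiles are identical: both report the same pattern of rise and fall across the task, which is the only thing that can safely be compared between them.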

A subjective rating of SA represents how that rater currently perceives his own (or someone else’s) SA. Of course, when self-ratings are given during a task, the rater at that moment may be unaware of some important aspects of the situation (the “unknown unknowns”); hence self-raters can significantly overestimate their actual SA (e.g., Foy & McGuinness, 2000). Conversely, observers cannot see inside the minds of performing subjects and their observer-ratings, based purely on observable behaviours, may underestimate how much the subject actually knows (Endsley, 1995b). It is useful, then, to distinguish between actual SA quality and perceived SA quality. As a rule, explicit probe techniques measure the actual quality of SA while subjective techniques measure the perceived quality of SA, or confidence in SA (McGuinness, 2004). For this reason, some researchers have criticized subjective ratings as lacking validity and reject their use on this basis. Knowing how SA is perceived can sometimes be just as important as measuring actual SA, however, since errors in perceived SA quality (over-confidence or under-confidence in SA) may have just as harmful an effect on individuals’ or teams’ decision-making as errors in their actual SA (Endsley, 1998).
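The gap between perceived and actual SA quality can be expressed as a simple difference score. The following sketch assumes that actual SA is available as a probe accuracy between 0 and 1 and perceived SA as a self-rating on a 1-10 scale; the function name `confidence_bias`, the linear rescaling and the example data are all hypothetical and do not come from the cited studies.

```python
# Hypothetical data: probe accuracy (0-1) and self-rated SA (1-10) per subject.
subjects = {
    "s1": {"probe_accuracy": 0.50, "self_rating": 9},
    "s2": {"probe_accuracy": 0.75, "self_rating": 4},
}

def confidence_bias(probe_accuracy, self_rating, scale_min=1, scale_max=10):
    """Rescale the self-rating to 0-1 and subtract measured accuracy.

    Positive values suggest over-confidence; negative values, under-confidence.
    """
    perceived = (self_rating - scale_min) / (scale_max - scale_min)
    return perceived - probe_accuracy

bias = {name: confidence_bias(**data) for name, data in subjects.items()}

for name, value in bias.items():
    print(name, round(value, 2))
```

Here subject s1 rates their own SA highly despite answering only half the probes correctly (a positive bias), while s2 shows the opposite pattern. Treating a rating scale and an accuracy score as directly comparable is itself a strong assumption, so such a score is better read as a rough indicator than as a calibrated measure.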

Which technique?

Measurement techniques vary in their degree of intrusiveness and the extent to which they artificially manipulate or intervene in (and so potentially disrupt) subjects’ normal task performance. The least intrusive techniques are those which infer SA from subjects’ observable behaviour and performance (implicit measures). Subjective ratings can also involve little or no disruption of the task (they can be obtained by an observer, or given by the subject post-task). At the opposite end of the scale, “probe” techniques which provide explicit measures of SA may require the task to be paused or frozen and all information displays to be blanked or hidden from view whilst subjects respond to a set of queries about the situation. In addition, some techniques focus directly on the quality of SA as a “product” (i.e., on the accuracy and completeness of what is known and understood about the situation), while others focus on the processes by which SA is achieved and maintained, such as how team-members exchange information. There is no single “optimum” method: each has its pros and cons and the user must decide what best fits the particular requirements and constraints of the given exercise or experimental setup. The general recommendation for measuring SA is that, when possible, several measures of SA should be utilized to ensure concurrent validity (Harwood et al., 1988) and to provide a balanced, informative assessment.

Controversies

Situation awareness (SA) is a human factors term which has become something of a buzzword. As a buzzword, the term 'situation awareness' is unfortunate because it sounds tautological, yet there is more to situation awareness than simply being 'aware of your situation'. Despite its popularity and ubiquity, there is a lot of debate within the scientific literature about what SA is, how it works and whether we need such a concept at all.

Information and Knowledge

SA relies on information in the context (an objective state of the world) and a state of knowledge built on that information (a subjective state). Good SA, therefore, does not guarantee good performance, nor does poor SA preclude it. Knowledge in the head (as opposed to information in the world) can be defined as a “body of information possessed by a person” yet it is also “more than a simple compendium of dispositions to respond or a collection of conditioned responses” (Reber, 1995, p. 401). From an information processing viewpoint, knowledge appears to fall along a continuum. Knowledge can be a discrete instance of perceived information, akin to Gibson’s (1979) ideas of direct perception and, therefore, would have a direct analogue to an objectively manifest physical stimulus. Knowledge in this case is rather like holding a mirror up to reality. At the other end of the continuum, knowledge can be an entity that arises, or emerges, from the complex interplay of various mental processes, for which a publicly observable physical stimulus need not be present or else bears little structural resemblance to it. In other words, knowledge can be created by the simple perception of elements in the environment (what Endsley would call Level 1 SA) as well as comprehending what those elements mean (Level 2 SA). Level 3 SA is about predicting what is going to happen in the immediate future. Arguably, all perceptual experience is hypothetical, thus a person develops a mental theory of the world that helps them to test it and explain it, modifying the theory as time and situations require. In the language of Endsley's theory this is Level 3 SA.

It is tempting to see this three level model of SA as a sequential model but clearly it is not. For example, people can have Level 2 and 3 SA without perceiving anything at all. Consider the case of driving along a familiar route. The driver 'expects' to perceive certain features and will be 'projecting' future states (Level 3 SA) even though those features haven't actually been encountered yet. Let us also assume that this driver is highly expert. It is conceivable that Level 1 SA could lead directly to Level 3 SA; no explicit 'comprehension' phase (Level 2 SA) is required. Furthermore, whilst it is tempting to see Endsley's three level model as not just sequential but also single channel, it is unlikely that SA is built up 'element by element'. If SA is about creating a good, parsimonious 'situational theory' of the world in order to base decisions and actions upon, then there is no particular requirement for it to literally 'look like' the world to which it is mapped nor to be as complex. If anything, quite the reverse.

Process

The process of acquiring and maintaining SA, which Endsley (1995) refers to as 'Situation Assessment', subsumes a panoply of mental operations and structures. As such, some authors suggest that SA is not really a psychological construct at all (in the way that, for example, Short Term Memory is) and that SA “should be viewed as a label for a variety of cognitive processing activities that are critical to dynamic, event driven and multi-task fields of practice” (Sarter & Woods, 1995, p. 16; Patrick & James, 2004). Quite frequently the term mental model is used as a description either of what SA is (a state of knowledge) or how it is acquired (a process). Although appealing, the term mental model is itself highly contentious, not least because of the circularity of being able to replace SA altogether with some version of the mental model concept. A whole range of other rather general concepts like memory, attention, perceptual processes and so forth have been implicated in the acquisition and maintenance of SA but there is, theoretically at least, still no definite answer as to the 'mechanisms' or 'process' of SA.

What we can say is related to the rather more non-linear idea of constructivism. This is the idea that people play a large part in creating the situation from which they develop their awareness. As Smith & Hancock (1995) put it, SA can be viewed as “a generative process of knowledge creation” (p. 142) in which “[…] the environment informs the agent, modifying its knowledge. Knowledge directs the agent’s activity in the environment. That activity samples and perhaps anticipates or alters the environment, which in turn informs the agent” (Smith & Hancock, 1995, p. 142) and so on. SA can itself lead to behaviours that create a better situation to be aware of. This non-linear flavour to SA takes us away from the somewhat mechanistic notions of progressing sequentially through discrete stages of information processing. Functionally, this may ultimately be a more useful approach.

Product

SA can also be regarded as a product. This view is less concerned with the processes of SA acquisition ("how it works") and rather more with "what it is" and "what it does". This, according to Endsley (1995), is Situation Awareness proper. Both Endsley (1995) and Gugerty (1997) speak of ‘knowledge states’ as representative of the ‘product’ of SA. Someone with a particular state of knowledge will, therefore, have better or worse SA than someone with a different state of knowledge.

Knowledge states represent the way in which situations are experienced, a so-called ‘situation focus’. This is more “concerned with the mapping of the relevant information in the situation onto a mental representation of that information within the individual” (Rousseau, Tremblay & Breton, 2004, p. 5). This viewpoint conveys a rather normative flavour to much of the extant work on SA (e.g. SA measurement methods such as SAGAT; Endsley, 1988) that looks for discrepancies between the ‘situation’ and the person's ‘awareness’. It makes the tacit assumption that if the individual's state of knowledge is not a mirror image of the actual 'situation' then their SA is faulty. Despite the appeal of such a view it is conceivable that good ‘awareness’ can be achieved not just by correspondence between an objective state of the world and its mental analogue, but also by the following:

Parts and Relations. The mappings that exist between different perspectives on a situation; that is, seeing the connections between situational elements in addition to the elements themselves (e.g. Flach, Mulder & Paassen, 2004). In other words, the whole of situational knowledge may not be equal to the sum of its parts.

Abstraction. The way in which information and ‘awareness’ can be chunked into new functional units (thereby forming entities at higher, more implicit levels of abstraction) in order to develop a ‘situational theory’ of the world (Gugerty, 1997; Banbury, Croft, Macken & Jones, 2004; Bryant, Lichacz, Hollands & Baranski, 2004; Chase & Simon, 1973; Gobet, 1998). The term situational theory is a general term that reflects the “hypothetical nature of perceptual experience” (Bryant et al., 2004, p. 110). A mental theory reflects the fact that ‘what is in the person's head’ (so to speak) is, arguably, “a representation that mirrors, duplicates, imitates or in some way illustrates a pattern of relationships observed in data or in nature […]”, “a characterisation of a process […]” that is able to provide “explanations for all attendant facts” (Reber, 1995; p. 465, 793). In other words, the mental theory might not look anything like the actual situation; in fact, research in the field of expertise would suggest that the more expert a person is in a particular situation, the less their situational or mental theory of the world is likely to look like it.

Parsimony. A good theory, and there is little reason to suppose that a person's situational theory is any different in this regard, is one that aims to reduce complexity; the better the theory or model is, the more parsimonious it is likely to be (e.g. Gobet, 1998; Chase & Simon, 1973). The principle of parsimony would actually require the 'products' of SA to be at a higher, probably more implicit, level of abstraction. Driving Without Attention Mode (DWAM; Kerr, 1991; May & Gale, 1998) seems to be a case in point. DWAM is an extreme example of ‘implicit SA’. It is an event that is characterised by a car driver not being able to recall how they arrived at a destination. Yet there must clearly have been a functioning mental theory of the driving context, and concomitant SA, in order for them to have arrived safely at all (despite a lack of ability to explicitly describe ‘how’ or to map situational elements onto their knowledge, or apparent lack of knowledge of the situation). In other words, the best mental theories, those developed by experts at a particular task, are likely to be the ones that cannot be (easily) described or verbalised.

Summary

We have arrived at a place that is rather a long way away from simply 'being aware of your situation'. SA is a surprisingly complex and hotly contested subject, but we can distill all of this to say some useful and relatively uncontentious things about what it is, what it does, how it works and why it is useful.

- The term SA "is a shorthand description for keeping track of what is going on around you in a complex, dynamic environment" (Moray, 2005, p. 4).

- The aim of SA "is to keep the [individual] tightly coupled to the dynamics of the environment" (Moray, 2005, p. 4).

- How does it work? A collection of psychological processes enables people to construct a situational theory that structures what they perceive, know and expect and connects these into meaningful relationships, so that they can understand, in the most parsimonious way possible, what is happening and what is going to happen.

- Why is it useful? The dynamic situational model helps to guide decision making and action. SA is part of the process of supporting the creation and maintenance of that model.

References

- Adam, E.C. (1993). Fighter cockpits of the future. Proceedings of 12th DASC, the 1993 IEEE/AIAA Digital Avionics Systems Conference, 318-323.

- Artman, H. & Garbis, C. (1998). Situation awareness as distributed cognition. In: Proceedings of the European conference on cognitive ergonomics, cognition and co-operation. Limerick, Ireland, pp 151–156.

- Baber, C., Houghton, R. & Cowton, M. (2004). WESTT: Reconfigurable Human Factors Model for Network Enabled Capability. Paper presented at the RTO NMSG Symposium on “Modelling and Simulation to Address NATO’s New and Existing Military Requirements”, Koblenz, Germany, 7-8 October 2004; RTO-MP-MSG-028. http://ftp.rta.nato.int/public//PubFullText/RTO/MP/RTO-MP-MSG-028/MP-MSG-028-11.pdf

- Banbury, S. P., Croft, D. G., Macken, W. J. & Jones, D. M. (2004). A cognitive streaming account of situation awareness. In S. Banbury & S. Tremblay (Eds) A cognitive approach to situation awareness: theory and application. (Ashgate: Aldershot).

- Bell, H. H. & Lyon, D. R. (2000). Using observer ratings to assess situation awareness. In M. R. Endsley (Ed) Situation awareness analysis and measurement. (Mahwah, NJ: Lawrence Erlbaum Associates).

- Bryant, D. J., Lichacz, F. M. J., Hollands, J. G. & Baranski, J. V. (2004). Modeling situation awareness in an organisational context: Military command and control. In S. Banbury & S. Tremblay (Eds) A cognitive approach to situation awareness: theory and application. (Ashgate: Aldershot).

- Carretta, T.R., Perry, D.C. & Ree, M.J. (1996). Prediction of situational awareness in F-15 pilots. Int. J. Aviat. Psychol., 6(1), 21-41.

- Chase, W. G. & Simon, H. A. (1973). Perception in chess. Cognitive Psychology, 4, 55-81.

- Cooper, J. (1995). Dominant battlespace awareness and future warfare. In S.E. Johnson & M.C. Libicki (Eds.), Dominant Battlespace Knowledge: The Winning Edge. Washington, DC: National Defense University.

- Dominguez, C., Vidulich, M., Vogel, E. & McMillan, G. (1994). Situation Awareness: Papers and Annotated Bibliography. Armstrong Laboratory, Human System Center, ref. AL/CF-TR-1994-0085.

- Endsley, M. R. (1988). Situation awareness global assessment technique (SAGAT). Proceedings of the National Aerospace and Electronics Conference (NAECON). (New York: IEEE), 789-795.

- Endsley, M. R. (1995a). Toward a theory of situation awareness in dynamic systems. Human Factors, 37(1), 32–64.

- Endsley, M. R. (1995b). Measurement of situation awareness in dynamic systems. Human Factors, 37(1), 65-84.

- Endsley, M. R. (1998). A comparative analysis of SAGAT and SART for evaluations of situation awareness. In Proceedings of the Human Factors and Ergonomics Society 42nd Annual Meeting (pp. 82-86). Santa Monica, CA: The Human Factors and Ergonomics Society.

- Endsley, M. R. (2000). Theoretical underpinnings of situation awareness: A critical review. In M. R. Endsley & D. J. Garland (Eds.), Situation Awareness Analysis And Measurement. Mahwah, NJ: LEA.

- Endsley, M. R., Holder, L. D., Leibrecht, B. C., Garland, D. C., Wampler, R. L., & Matthews, M. D. (2000). Modeling and measuring situation awareness in the infantry operational environment (1753). Alexandria, VA: Army Research Institute. http://www.satechnologies.com/Papers/pdf/InfantrySA.pdf

- Endsley, M. R., & Jones, W. M. (1997). Situation awareness, information dominance, and information warfare. (Tech Report 97-01). Belmont, MA: Endsley Consulting.

- Endsley, M. R., Selcon, S. J., Hardiman, T. D., & Croft, D. G. (1998). A comparative evaluation of SAGAT and SART for evaluations of situation awareness. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (pp. 82-86). Santa Monica, CA: Human Factors and Ergonomics Society. http://www.satechnologies.com/Papers/pdf/HFES98-SAGATvSART.pdf

- Flach, J., Mulder, M. & Paassen, M. M. (2004). The concept of the situation in psychology. In S. Banbury & S. Tremblay (Eds) A cognitive approach to situation awareness: theory and application. (Ashgate: Aldershot).

- Foy, L. & McGuinness, B. (2000). Implications of cockpit automation for crew situational awareness. Proceedings of the Conference on Human Performance, Situation Awareness and Automation: User-Centered Design for the New Millennium, pp. 101-106.

- Fracker, M. (1991). Measures of Situation Awareness: Review and Future Directions (Rep. No.AL-TR-1991-0128). Wright Patterson Air Force Base, Ohio: Armstrong Laboratories.

- French, H. T., Clark, E., Pomeroy, D., Seymour, M., & Clarke, C. R. (2007). Psycho-physiological Measures of Situation Awareness. In M. Cook, J. Noyes & Y. Masakowski (Eds.), Decision Making in Complex Environments. London: Ashgate. ISBN: 0 7546 4950 4.

- Garstka, J. and Alberts, D. (2004). Network Centric Operations Conceptual Framework Version 2.0, U.S. Office of Force Transformation and Office of the Assistant Secretary of Defense for Networks and Information Integration.

- Gibson, J. J. (1979). The ecological approach to visual perception. Houghton-Mifflin: Boston.

- Gobet, F. (1998). Expert memory: a comparison of four theories. Cognition, 66, 115-152.

- Gugerty, L. J. (1997). Situation awareness during driving: explicit and implicit knowledge in dynamic spatial memory. Journal of Experimental Psychology: Applied, 3(1), 42-66.

- Harwood, K., Barnett, B., & Wickens, C.D. (1988). Situational awareness: A conceptual and methodological framework. In F.E. McIntire (Ed.), Proceedings of the 11th Biennial Psychology in the Department of Defense Symposium (pp. 23-27). Colorado Springs, CO: U.S. Air Force Academy.

- Jeannot, E., Kelly, C. & Thompson, D. (2003). The Development of Situation Awareness Measures in ATM Systems. Brussels: Eurocontrol.

- Jones, D. G., & Endsley, M. R. (2000). Examining the validity of real-time probes as a metric of situation awareness. In Proceedings of the 14th Triennial Congress of the International Ergonomics Association and the 44th Annual Meeting of the Human Factors and Ergonomics Society. Santa Monica, CA: HFES. http://www.satechnologies.com/Papers/pdf/HFES2000-probes.pdf

- Kerr, J. S. (1991). Driving without attention mode (DWAM): A normalisation of inattentive states in driving. In Vision in Vehicles III. North Holland: Elsevier.

- Marsh, H. S. (2000). Beyond Situational Awareness: The Battlespace of the Future. Washington, D.C.: Office of Naval Research, 20 March 2000.

- May, J. L., & Gale, A. G. (1998). How did I get here? – driving without attention mode. In M. Hanson (ed), Contemporary Ergonomics 1998. London: Taylor and Francis.

- McGuinness, B. (2004). Quantitative analysis of situational awareness (QUASA): Applying signal detection theory to true/false probes and self ratings. In Proceedings of the 9th International Command and Control Research and Technology Symposium (ICCRTS), Copenhagen, Sept. 2004.

- Metalis, S.A. (1993). Assessment of pilot situational awareness: Measurement via simulation. In Proceedings of the Human Factors Society 37th Annual Meeting (pp. 113-117). Santa Monica, CA: The Human Factors and Ergonomics Society.

- Moray, N. (2004). Ou sont les neiges d'antan? In D. A. Vincenzi, M. Mouloua & P. A. Hancock (Eds), Human Performance, Situation Awareness and Automation: Current Research and Trends. Mahwah: LEA.

- Rousseau, R., Tremblay, S. & Breton, R. (2004). Defining and modeling situation awareness: A critical review. In S. Banbury & S. Tremblay (Eds) A cognitive approach to situation awareness: theory and application. (Ashgate: Aldershot).

- Salmon, P.M., Stanton, N. A., Walker, G., & Green, D. (2006). Situation Awareness measurement: A review of applicability for C4i environments. Applied Ergonomics, 37, 225-238.

- Sarter, N. B. & Woods, D. D. (1995). How in the world did we ever get into that mode? Mode error and awareness in supervisory control. Human Factors, 37(1), 5-19.

- Shu, Y. & Furuta, K. (2005). An inference method of team situation awareness based on mutual awareness. Cognition, Technology & Work, 7, 272–287.

- Smith, K., & Hancock, P. A. (1995). Situation awareness is adaptive, externally directed consciousness. Human Factors, 37(1), 137-148.

- Stanton, N. A., Salmon, P., Walker, G. H., Baber, C. & Jenkins, D. (2005). Human Factors Methods: A Practical Guide for Engineering and Design. Aldershot: Ashgate.

- Stanton, N. A., Chambers, P. R. G. & Piggott, J. (2001). Situational awareness and safety. Safety Science.

- Sukthankar, R. (1997). Situational awareness for tactical driving. Unpublished doctoral dissertation, Carnegie Mellon University, Pittsburgh.

- Taylor, R. M. (1989). Situational awareness rating technique (SART): The development of a tool for aircrew systems design. In Proceedings of the AGARD AMP Symposium on Situational Awareness in Aerospace Operations, CP478. Neuilly-sur-Seine: NATO AGARD.

- Uhlarik, J. & Comerford, D. D. (2002). A Review of Situation Awareness Literature Relevant to Pilot Surveillance Functions. FAA Office of Aerospace Medicine, report no. DOT/FAA/AM-02/3, U.S. Department of Transportation. http://amelia.db.erau.edu/reports/faa/am/AM02-03.pdf

- Walker, G. H., Stanton, N. A., & Young, M. S. (2006). The ironies of vehicle feedback in car design. Ergonomics, 49(2), 161-179.

- Watts, B. D. (2004). "Situation awareness" in air-to-air combat and friction. Chapter 9 in Clausewitzian Friction and Future War, McNair Paper no. 68 (revised edition; originally published in 1996 as McNair Paper no. 52). Institute for National Strategic Studies, National Defense University.


This page uses Creative Commons Licensed content from Wikipedia.