
In thermodynamics, entropy, symbolized by S, is a state function of a thermodynamic system defined by the differential quantity $dS = \frac{dQ}{T}$, where dQ is the amount of heat absorbed in a reversible process in which the system goes from one state to another, and T is the absolute temperature.[1] Entropy is one of the factors that determine the free energy of the system, and it appears in the second law of thermodynamics. In a simplified approach for teaching purposes, entropy is described as measuring the spontaneous dispersal of energy at a specific temperature, although this dispersal is not necessarily spatial.[2]

In statistical mechanics, entropy is a measure of the number of possible microscopic configurations of the system. The statistical definition of entropy is generally thought to be the more fundamental definition, from which all other important properties of entropy follow. Although entropy was originally a thermodynamic construct, the concept has been adapted in other fields of study, including information theory, psychodynamics, and thermoeconomics.

"Ice melting" - a classic example of entropy increasing

Overview

In a thermodynamic "universe" made up of "surroundings" and "systems", differences in pressure, density, and temperature all tend to equalize over time. Consider the illustration, discussed in the ice melting example below, of a warm room (the surroundings) containing a cold glass of ice and water (the system). The temperature difference begins to equalize as heat energy from the warm surroundings spreads out into the cooler ice and water. Over time, the temperature of the glass and its contents becomes equal to that of the room. The entropy of the room has decreased, because some of its energy has been dispersed to the ice and water. However, as calculated in the example, the entropy of the ice and water has increased by more than the entropy of the room has decreased. This is always true: the dispersal of energy from a warmer to a cooler body always results in a net increase in entropy. The converse is not necessarily true, because entropy can also increase without any such heat flow, as in the entropy of mixing. Thus, when the "universe" of room and glass has reached equilibrium at a common temperature, the entropy change from the initial state is at a maximum. The entropy of the thermodynamic system is a measure of how far this equalization has progressed.

The entropy of a thermodynamic system can be interpreted in two distinct, but compatible, ways:

- From a macroscopic perspective, in classical thermodynamics the entropy is interpreted simply as a state function of a thermodynamic system: that is, a property depending only on the current state of the system, independent of how that state came to be achieved. The state function has the important property that, when multiplied by a reference temperature, it can be understood as a measure of the amount of energy in a physical system that cannot be used to do thermodynamic work, i.e. work mediated by thermal energy. More precisely, in any process where the system gives up energy ΔE and its entropy falls by ΔS, a quantity of at least $T_R\,\Delta S$ of that energy must be given up to the system's surroundings as unusable heat ($T_R$ is the temperature of the system's external surroundings). Otherwise the process will not go forward.

- From a microscopic perspective, in statistical thermodynamics the entropy is a measure of the number of microscopic configurations that are capable of yielding the observed macroscopic description of the thermodynamic system:

$$S = k_B \ln \Omega$$

where Ω is the number of microscopic configurations, and $k_B$ is Boltzmann's constant. It can be shown that this definition of entropy, sometimes referred to as Boltzmann's postulate, reproduces all of the properties of the entropy of classical thermodynamics.[citation needed]
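The following is a minimal Python sketch of the Boltzmann relation $S = k_B \ln \Omega$. It assumes a hypothetical toy model of N distinguishable two-state particles whose macrostate is fixed by how many of them are "up", so Ω is a binomial coefficient. The function names are illustrative only, and `K_B` uses the exact SI value of Boltzmann's constant.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact SI value since 2019)


def boltzmann_entropy(omega: int) -> float:
    """S = k_B ln(Omega) for a macrostate realized by `omega` microstates."""
    if omega < 1:
        raise ValueError("a macrostate needs at least one microstate")
    return K_B * math.log(omega)


def two_state_microstates(n_total: int, n_up: int) -> int:
    """Hypothetical toy model: number of ways to choose which n_up of
    n_total distinguishable two-state particles are in the 'up' state."""
    return math.comb(n_total, n_up)


# A macrostate with a single microstate (perfect order) has zero entropy.
print(boltzmann_entropy(1))  # 0.0

# For 100 particles, the evenly split macrostate has far more microstates,
# and therefore a higher entropy, than a lopsided one.
even = two_state_microstates(100, 50)
lopsided = two_state_microstates(100, 10)
print(boltzmann_entropy(even) > boltzmann_entropy(lopsided))  # True
```

Python's `math.log` accepts arbitrarily large integers, so Ω can be passed exactly without overflow for moderate N.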

An important law of physics, the second law of thermodynamics, states that the total entropy of any isolated thermodynamic system tends to increase over time, approaching a maximum value. Unlike almost all other laws of physics, this associates thermodynamics with a definite arrow of time. However, for a universe of infinite size, which cannot be regarded as an isolated system, the second law does not apply.

History

- Main article: History of entropy

The history of entropy begins with the work of mathematician Lazare Carnot, who in his 1803 work Fundamental Principles of Equilibrium and Movement postulated that in any machine the accelerations and shocks of the moving parts all represent losses of moment of activity. In other words, in any natural process there exists an inherent tendency towards the dissipation of useful energy. Building on this work, in 1824 Lazare's son Sadi Carnot published Reflections on the Motive Power of Fire, in which he set forth the view that in all heat engines "caloric", or what is now known as heat, moves from hot to cold and that "some caloric is always lost". This lost caloric was a precursor of the modern concept of entropy. Though formulated in terms of caloric rather than entropy, this was an early insight into the second law of thermodynamics. In the 1850s, Rudolf Clausius began to give this "lost caloric" a mathematical interpretation by questioning the nature of the inherent loss of heat when work is done, e.g. heat produced by friction.[3] In 1865, Clausius gave this heat loss a name:[4]

“I propose to name the quantity S the entropy of the system, after the Greek word [τροπη trope], the transformation. I have deliberately chosen the word entropy to be as similar as possible to the word energy: the two quantities to be named by these words are so closely related in physical significance that a certain similarity in their names appears to be appropriate.”

Later, scientists such as Ludwig Boltzmann, Willard Gibbs, and James Clerk Maxwell gave entropy a statistical basis. Carathéodory linked entropy with a mathematical definition of irreversibility, in terms of trajectories and integrability.

Thermodynamic definition

- Main article: Entropy (thermodynamic views)

In the early 1850s, Rudolf Clausius began to put the concept of "energy turned to waste" on a differential footing. Essentially, he set forth the concept of the thermodynamic system and argued that in any irreversible process a small amount of heat energy dQ is incrementally dissipated across the system boundary.

Specifically, in 1850 Clausius published his first memoir, in which he presented a verbal argument as to why Carnot’s theorem was not perfectly correct as it stood and needed amendment to be consistent with the equivalence of heat and work, i.e. Q = W. In 1854, Clausius stated: "In my memoir 'On the Moving Force of Heat, &c.', I have shown that the theorem of the equivalence of heat and work, and Carnot’s theorem, are not mutually exclusive, but that, by a small modification of the latter, which does not affect its principle, they can be brought into accordance." This small modification of the latter is what developed into the second law of thermodynamics.

1854 definition

In his 1854 memoir, Clausius first develops the concepts of interior work, i.e. "those which the atoms of the body exert upon each other", and exterior work, i.e. "those which arise from foreign influences to which the body may be exposed", which may act on a working body of fluid or gas, typically functioning to work a piston. He then discusses the three types of heat into which Q may be divided:

- Heat employed in increasing the heat actually existing in the body.

- Heat employed in producing the interior work.

- Heat employed in producing the exterior work.

Building on this logic, and following a mathematical presentation of the first fundamental theorem, Clausius then presents the first-ever mathematical formulation of entropy, although at this stage in the development of his theories he calls it "equivalence-value", probably by analogy with the mechanical equivalent of heat, which was being developed at the time. He states:[5]

the second fundamental theorem in the mechanical theory of heat may thus be enunciated:

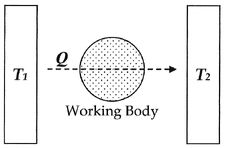

If two transformations which, without necessitating any other permanent change, can mutually replace one another, be called equivalent, then the generations of the quantity of heat Q from work at the temperature t , has the equivalence-value:

and the passage of the quantity of heat Q from the temperature T1 to the temperature T2, has the equivalence-value:

wherein T is a function of the temperature, independent of the nature of the process by which the transformation is effected.

This is the first-ever mathematical formulation of entropy. At this point, however, Clausius had not yet attached the label entropy, as we currently know it, to the concept; this would come over the following several years.[6] In modern terminology, we think of this equivalence-value as "entropy", symbolized by S. Thus, using the above description, we can calculate the entropy change ΔS for the passage of the quantity of heat Q from the temperature $T_1$, through the "working body" of fluid (see heat engine), which was typically a body of steam, to the temperature $T_2$ as shown below:

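If we make the assignment:

$$S = \frac{Q}{T}$$

then the entropy change, or "equivalence-value", for this transformation is:

$$\Delta S = S_\text{final} - S_\text{initial}$$

which equals:

$$\Delta S = \frac{Q}{T_2} - \frac{Q}{T_1}$$

and by factoring out Q, we obtain the following form, as derived by Clausius:

$$\Delta S = Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$$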
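Clausius's form $\Delta S = Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$ can be evaluated directly, as in the small Python sketch below. The function name and the sample numbers (1000 J flowing from 500 K to 300 K) are hypothetical and chosen only for illustration. The sketch shows that the entropy change is positive when heat passes from hot to cold, and negative for the reverse passage.

```python
def clausius_equivalence_value(q: float, t1: float, t2: float) -> float:
    """Entropy change Q(1/T2 - 1/T1), in J/K, when heat q (J) passes from
    absolute temperature t1 to absolute temperature t2 (both in K)."""
    if t1 <= 0 or t2 <= 0:
        raise ValueError("absolute temperatures must be positive")
    return q * (1.0 / t2 - 1.0 / t1)


# Hypothetical example: 1000 J passing from a 500 K body to a 300 K body.
dS = clausius_equivalence_value(1000.0, 500.0, 300.0)
print(round(dS, 3))  # 1.333

# The reverse passage, from cold to hot, would lower the total entropy.
print(clausius_equivalence_value(1000.0, 300.0, 500.0) < 0)  # True
```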

Later development

In 1876, American scientist Willard Gibbs, building on the work of Clausius and Hermann von Helmholtz, showed that the "available energy" ΔG in a thermodynamic system could be mathematically accounted for by subtracting the "energy loss" TΔS from the total energy change of the system ΔH, i.e. $\Delta G = \Delta H - T\,\Delta S$. These concepts were further developed by James Clerk Maxwell [1871] and Max Planck [1903].
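The bookkeeping $\Delta G = \Delta H - T\,\Delta S$ is easy to evaluate numerically, as in the Python sketch below. It assumes approximate textbook values for melting ice (ΔH of about 6010 J/mol and ΔS of about 22.0 J/(mol·K)). These numbers are illustrative assumptions, not data from this article. A negative ΔG indicates that the process can proceed spontaneously at that temperature and pressure.

```python
def gibbs_free_energy_change(delta_h: float, delta_s: float, temperature: float) -> float:
    """Delta G = Delta H - T * Delta S (J/mol) at constant temperature and pressure."""
    return delta_h - temperature * delta_s


# Assumed approximate values for the melting of ice.
DH_FUS = 6010.0  # J/mol
DS_FUS = 22.0    # J/(mol K)

for t in (263.0, 298.0):
    dg = gibbs_free_energy_change(DH_FUS, DS_FUS, t)
    verdict = "spontaneous" if dg < 0 else "not spontaneous"
    print(f"T = {t:.0f} K: dG = {dg:.1f} J/mol ({verdict})")
```

With these assumed values, the sketch gives ΔG = +224.0 J/mol at 263 K (ice does not melt) and ΔG = -546.0 J/mol at 298 K (ice melts). Here the TΔS term outweighs ΔH.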

Conjugate variables of thermodynamics:

| Generalized force | Generalized displacement |
|---|---|
| Pressure | Volume |
| (Stress) | (Strain) |
| Temperature | Entropy |
| Chemical potential | Particle number |

Entropy is said to be thermodynamically conjugate to temperature.

There is an important connection between entropy and the amount of internal energy in the system that is not available to perform work. In any process where the system gives up an energy ΔE and its entropy falls by ΔS, a quantity of at least $T_R\,\Delta S$ of that energy must be given up to the system's surroundings as unusable heat. Otherwise the process will not go forward. ($T_R$ is the temperature of the system's external surroundings, which may not be the same as the system's current temperature T.)
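The rule above implies an upper bound on useful work: at most $\Delta E - T_R\,\Delta S$ of the energy released can be extracted as work. A minimal Python sketch follows. The function name is hypothetical, and the sample numbers (1000 J released, entropy falling by 2 J/K, surroundings at 300 K) are illustrative only.

```python
def max_work(delta_e: float, delta_s: float, t_surroundings: float) -> float:
    """Upper bound on work (J) from a process in which the system gives up
    energy delta_e (J) while its entropy falls by delta_s (J/K, positive
    for a fall).

    At least t_surroundings * delta_s must leave as unusable heat, so the
    remainder is the most that can be extracted as work."""
    min_heat = t_surroundings * delta_s
    if min_heat > delta_e:
        raise ValueError("process cannot go forward: too little energy to carry off the entropy")
    return delta_e - min_heat


# Hypothetical numbers: 600 J must go to the surroundings as heat.
print(max_work(1000.0, 2.0, 300.0))  # 400.0
```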

Units

The SI unit of entropy is "joule per kelvin" (J·K−1), which is the same as the unit of heat capacity.

Statistical interpretation

- Main article: Entropy (statistical views)

In 1877, physicist Ludwig Boltzmann devised a probabilistic way to measure the entropy of an ensemble of ideal gas particles, in which he defined entropy to be proportional to the logarithm of the number of microstates such a gas could occupy. Since then, according to Erwin Schrödinger, the essential problem in statistical thermodynamics has been to determine the distribution of a given amount of energy E over N identical systems.

Statistical mechanics explains entropy as the amount of uncertainty (or "mixedupness", in the phrase of Gibbs) that remains about a system after its observable macroscopic properties have been taken into account. For a given set of macroscopic quantities, such as temperature and volume, the entropy measures the degree to which the probability of the system is spread out over different possible quantum states. The more states that are available to the system with appreciable probability, the greater the entropy. In essence, the most general interpretation of entropy is as a measure of our ignorance about a system. The equilibrium state of a system maximizes the entropy because we have lost all information about the initial conditions except for the conserved quantities; maximizing the entropy maximizes our ignorance about the details of the system.[7]
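One standard way to quantify how the probability is "spread out" over states is the Gibbs entropy formula $S = -k_B \sum_i p_i \ln p_i$. This formula is not given in the text above and is supplied here as an illustration. In the Python sketch below, the probability lists are hypothetical. The sketch shows that a uniform spread over four states gives $k_B \ln 4$, which matches the Boltzmann form for Ω = 4, and that a sharply peaked distribution gives less.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K


def gibbs_entropy(probs):
    """S = -k_B * sum(p ln p), summed over states with p > 0."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


uniform = [0.25] * 4              # probability spread evenly over 4 states
peaked = [0.97, 0.01, 0.01, 0.01]  # system almost certainly in one state

print(gibbs_entropy(uniform) > gibbs_entropy(peaked))              # True
print(math.isclose(gibbs_entropy(uniform), K_B * math.log(4)))     # True
```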

On the molecular scale, the two definitions match up because adding heat to a system, which increases its classical thermodynamic entropy, also increases the system's thermal fluctuations. This increases our lack of information about the exact microscopic state of the system, i.e. it increases the statistical mechanical entropy.

Qualitatively, entropy is often associated with the amount of seeming disorder in the system. For example, solids (which are typically ordered on the molecular scale) usually have smaller entropy than liquids, and liquids smaller entropy than gases. This happens because the number of different microscopic states available to an ordered system is usually much smaller than the number of states available to a system that appears to be disordered given the human desire for symmetry.

Entropy increase as energy dispersal

The description of entropy in terms of "mixedupness" or "disorder", together with the abstract nature of statistical mechanics, can lead to confusion and considerable difficulty for students beginning the subject.[8][9] An approach to instruction emphasising the qualitative simplicity of entropy has been developed.[10] In this approach, entropy increase is described as the spontaneous dispersal of energy: how much energy is spread out in a process, or how widely spread out it becomes, at a specific temperature.[2] A cup of hot coffee in a room will eventually cool, and the room will become a bit warmer. The excess heat energy in the hot coffee has been dispersed throughout the room, and the net entropy of the system (coffee and room) increases as a result.

In this approach the statistical interpretation is related to quantum mechanics, and the following generalization is made for molecular thermodynamics: "Entropy measures the energy dispersal for a system by the number of accessible microstates, the number of arrangements (each containing the total system energy) for which the molecules' quantized energy can be distributed, and in one of which – at a given instant – the system exists prior to changing to another."[11] On this basis it is claimed that in all everyday spontaneous physical happenings and chemical reactions, some type of energy flows from being localized or concentrated to becoming spread out: often to a larger space, and always to a state with a greater number of microstates.[10] Thus, in situations such as the entropy of mixing, it is not strictly correct to visualise a spatial dispersion of energy. Rather, the motional energy of each constituent's molecules is spread out in the sense of having the chance of being, at one instant, in any one of many more microstates in the larger mixture than it had before mixing.[11]

The subject remains subtle and difficult, and in complex cases the qualitative relation of energy dispersal to entropy change can be so inextricably obscured that it is moot. It is claimed, though, that this does not weaken the approach's explanatory power for beginning students.[10] In all cases, however, the statistical interpretation holds: entropy increases as the system moves from a macrostate that is very unlikely (for a system in equilibrium) to a macrostate that is much more probable.

Information theory

- Main article: Information entropy

The concept of entropy in information theory describes how much randomness (or, alternatively, "uncertainty") there is in a signal or random event. An alternative way to look at this is to talk about how much information is carried by the signal.

The entropy in statistical mechanics can be considered to be a specific application of Shannon entropy, according to a viewpoint known as MaxEnt thermodynamics. Roughly speaking, Shannon entropy is proportional to the minimum number of yes/no questions you have to ask to get the answer to some question. The statistical mechanical entropy is then proportional to the minimum number of yes/no questions you have to ask in order to determine the microstate, given that you know the macrostate.
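The "yes/no questions" picture can be made concrete in a Python sketch. Shannon entropy in bits, $H = -\sum_i p_i \log_2 p_i$, counts the average number of binary questions needed to identify an outcome. If the known macrostate leaves W equally likely microstates, then H = log2 W. Multiplying by $k_B \ln 2$ converts bits to thermodynamic units. The distributions used here are hypothetical examples.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K


def shannon_bits(probs):
    """Shannon entropy H = -sum(p log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Eight equally likely microstates: three yes/no questions pin one down.
h = shannon_bits([1 / 8] * 8)
print(h)  # 3.0

# Converting bits to thermodynamic units: S = k_B ln(2) * H = k_B ln(8).
s = K_B * math.log(2) * h

# Unequal probabilities need fewer questions on average than log2(3).
print(shannon_bits([0.5, 0.25, 0.25]))  # 1.5
```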

The second law

- Main article: Second law of thermodynamics

An important law of physics, the second law of thermodynamics, states that the total entropy of any isolated thermodynamic system tends to increase over time, approaching a maximum value. By implication, the entropy of the universe (i.e. the system and its surroundings), assumed to be an isolated system, tends to increase. There are two important consequences. First, heat cannot of itself pass from a colder to a hotter body: it is impossible to transfer heat from a cold to a hot reservoir without at the same time converting a certain amount of work to heat. Second, it is impossible for any device that operates on a cycle to receive heat from a single reservoir and produce a net amount of work. Such a device can only get useful work out of the heat if heat is at the same time transferred from a hot to a cold reservoir. This rules out an isolated "perpetual motion" machine. It also follows that a process, such as a chemical reaction, that produces a smaller increase in entropy is energetically more efficient.
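The cyclic-device consequence can be checked with simple entropy bookkeeping. The engine returns to its starting state each cycle, so the reservoirs' total entropy change, $-Q_h/T_h + Q_c/T_c$, must not be negative. This forces some heat $Q_c \ge Q_h T_c / T_h$ to be rejected to the cold reservoir. The Python sketch below uses a hypothetical function name and sample numbers. When only one reservoir is available ($T_c = T_h$), the work bound is zero.

```python
def max_cycle_work(q_hot: float, t_hot: float, t_cold: float) -> float:
    """Upper bound on net work per cycle (J) from heat q_hot (J) drawn at
    t_hot, with waste heat rejected at t_cold (absolute temperatures, K).

    Second-law bookkeeping: -q_hot/t_hot + q_cold/t_cold >= 0, so at least
    q_hot * t_cold / t_hot must be rejected as heat."""
    if not 0 < t_cold <= t_hot:
        raise ValueError("need 0 < t_cold <= t_hot")
    min_q_cold = q_hot * t_cold / t_hot
    return q_hot - min_q_cold


print(max_cycle_work(1000.0, 500.0, 300.0))  # 400.0
# A single reservoir (t_cold == t_hot) yields no net work.
print(max_cycle_work(1000.0, 400.0, 400.0))  # 0.0
```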

The arrow of time

- Main article: Entropy (arrow of time)

Entropy is the only quantity in the physical sciences that "picks" a particular direction for time, sometimes called an arrow of time. As we go "forward" in time, the Second Law of Thermodynamics tells us that the entropy of an isolated system can only increase or remain the same; it cannot decrease. Hence, from one perspective, entropy measurement is thought of as a kind of clock.

Entropy and cosmology

We have previously mentioned that a finite universe may be considered an isolated system. As such, it may be subject to the Second Law of Thermodynamics, so that its total entropy is constantly increasing. It has been speculated that the universe is fated to a heat death in which all the energy ends up as a homogeneous distribution of thermal energy, so that no more work can be extracted from any source.

If the universe can be considered to have generally increasing entropy, then - as Roger Penrose has pointed out - an important role in the increase is played by gravity, which causes dispersed matter to accumulate into stars, which collapse eventually into black holes. Jacob Bekenstein and Stephen Hawking have shown that black holes have the maximum possible entropy of any object of equal size. This makes them likely end points of all entropy-increasing processes, if they are totally effective matter and energy traps. Hawking has, however, recently changed his stance on this aspect.

The role of entropy in cosmology remains a controversial subject. Recent work has cast extensive doubt on the heat death hypothesis and the applicability of any simple thermodynamic model to the universe in general. Although entropy does increase in the model of an expanding universe, the maximum possible entropy rises much more rapidly and leads to an "entropy gap", thus pushing the system further away from equilibrium with each time increment. Other complicating factors, such as the energy density of the vacuum and macroscopic quantum effects, are difficult to reconcile with thermodynamical models, making any predictions of large-scale thermodynamics extremely difficult.

Generalized entropy

Many generalizations of entropy have been studied, two of which, Tsallis and Rényi entropies, are widely used and the focus of active research.

The Rényi entropy is an information measure for fractal systems.

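$$H_\alpha(X) = \frac{1}{1-\alpha} \log \left( \sum_{i=1}^{n} p_i^\alpha \right)$$

where α > 0 is the 'order' of the entropy, and $p_i$ are the probabilities of $\{x_1, x_2, \ldots, x_n\}$. In the limit α → 1 we recover the standard (Shannon) entropy form.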
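A short Python sketch of the Rényi entropy $H_\alpha = \frac{1}{1-\alpha}\log\sum_i p_i^\alpha$ follows, using natural logarithms. The order α = 1 is treated as the Shannon limit, because the formula itself is 0/0 there. The sample distribution is hypothetical. The checks illustrate two properties: the α → 1 limit, and the fact that a uniform distribution over n outcomes gives log n for every order.

```python
import math


def renyi_entropy(probs, alpha):
    """H_alpha = log(sum p_i**alpha) / (1 - alpha), natural log, alpha > 0.
    alpha == 1 is handled as the limiting Shannon case -sum(p ln p)."""
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    ps = [p for p in probs if p > 0]
    if math.isclose(alpha, 1.0):
        return -sum(p * math.log(p) for p in ps)
    return math.log(sum(p ** alpha for p in ps)) / (1.0 - alpha)


dist = [0.5, 0.3, 0.2]  # hypothetical distribution
shannon = renyi_entropy(dist, 1.0)
near_one = renyi_entropy(dist, 1.0 + 1e-6)  # approaches the Shannon value

# For a uniform distribution every order gives log(n).
print(math.isclose(renyi_entropy([0.25] * 4, 2.0), math.log(4)))  # True
```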

The Tsallis entropy is employed in Tsallis statistics to study nonextensive thermodynamics:

$$S_q(p) = \frac{1}{q-1}\left(1 - \sum_i p_i^q\right)$$

where p denotes the probability distribution of interest, and q is a real parameter that measures the non-extensivity of the system of interest. In the limit as q → 1, we again recover the standard entropy.
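The "non-extensivity" can be seen numerically. For two independent subsystems A and B, the Tsallis entropies do not simply add. Instead, $S_q(A,B) = S_q(A) + S_q(B) + (1-q)\,S_q(A)\,S_q(B)$, which follows algebraically from the definition. This Python sketch uses the discrete form with k = 1 and hypothetical distributions. The q = 1 case is treated as the Shannon limit.

```python
import math


def tsallis_entropy(probs, q):
    """S_q = (1 - sum(p_i ** q)) / (q - 1), dimensionless (k = 1).
    q == 1 is handled as the limiting Shannon case -sum(p ln p)."""
    ps = [p for p in probs if p > 0]
    if math.isclose(q, 1.0):
        return -sum(p * math.log(p) for p in ps)
    return (1.0 - sum(p ** q for p in ps)) / (q - 1.0)


# Two independent subsystems A and B (hypothetical distributions).
a = [0.6, 0.4]
b = [0.7, 0.2, 0.1]
joint = [pa * pb for pa in a for pb in b]

q = 2.0
s_a, s_b, s_ab = (tsallis_entropy(d, q) for d in (a, b, joint))

# For q != 1 the joint entropy carries an extra cross term.
print(math.isclose(s_ab, s_a + s_b + (1 - q) * s_a * s_b))  # True
```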

Ice melting example

The illustration for this article is a classic example in which entropy increases in a small 'universe': a thermodynamic system consisting of the 'surroundings' (the warm room) and the 'system' (glass, ice, cold water). In this universe, some heat energy dQ from the warmer room surroundings (at 298 K, or 25 °C) will spread out to the cooler system of ice and water at its constant temperature T of 273 K (0 °C), the melting temperature of ice. Thus, the entropy of the system, which is dQ/T, increases by dQ/273 K. (The heat dQ for this process is the energy required to change water from the solid state to the liquid state, and is called the enthalpy of fusion, i.e. the ΔH for ice fusion.)

It is important to realize that the entropy of the surrounding room decreases by less than the entropy of the ice and water increases. The room temperature of 298 K is higher than 273 K, so the entropy change of the surroundings, dQ/298 K, is smaller in magnitude than the entropy change of the ice and water system, dQ/273 K. This is always true of spontaneous events in a thermodynamic system, and it shows the predictive importance of entropy: the final net entropy after such an event is always greater than the initial entropy.
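The comparison can be made quantitative for one mole of ice, as in the Python sketch below. It assumes an enthalpy of fusion of about 6010 J/mol, which is a standard textbook value and not a figure from this article. The same heat enters the ice and water at 273 K and leaves the room at 298 K, so the net entropy change is positive.

```python
DH_FUS = 6010.0   # J/mol, assumed enthalpy of fusion of ice
T_SYSTEM = 273.0  # K, ice + water at the melting point
T_ROOM = 298.0    # K, warm room

ds_system = DH_FUS / T_SYSTEM  # entropy gained by the ice + water
ds_room = -DH_FUS / T_ROOM     # entropy lost by the room
ds_total = ds_system + ds_room

print(f"{ds_system:.2f} {ds_room:.2f} {ds_total:.2f}")  # 22.01 -20.17 1.85
```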

As the temperature of the cool water rises to that of the room, and the room cools further imperceptibly, the sum of dQ/T over the continuous range of temperatures, taken in many small increments as the initially cool water warms, can be found by calculus (integration). The entire miniature "universe", i.e. this thermodynamic system, has increased in entropy. Energy has spontaneously become more dispersed and spread out in that "universe" than it was when the glass of ice water was introduced and became a "system" within it.
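The calculus step can be sketched numerically for one mole of meltwater warming from 273 K to 298 K. The Python sketch below sums dQ/T over many small steps, with dQ = C dT. It compares the result with the closed form $\int C\,dT/T = C \ln(T_2/T_1)$ and with the entropy the room loses by supplying that heat at about 298 K. The heat capacity of about 75.3 J/(mol·K) for liquid water is an assumed textbook value, and the helper name is hypothetical.

```python
import math

C_P = 75.3       # J/(mol K), assumed molar heat capacity of liquid water
T_START = 273.0  # K, meltwater
T_END = 298.0    # K, room temperature


def entropy_by_increments(c_p, t_start, t_end, steps=100_000):
    """Sum dQ/T over many small temperature steps (midpoint rule),
    where each step absorbs dQ = c_p * dT."""
    dt = (t_end - t_start) / steps
    total = 0.0
    for i in range(steps):
        t_mid = t_start + (i + 0.5) * dt
        total += c_p * dt / t_mid
    return total


numeric = entropy_by_increments(C_P, T_START, T_END)
exact = C_P * math.log(T_END / T_START)  # closed form of the integral

# The room supplies the same heat at (very nearly) a constant 298 K.
ds_room = -C_P * (T_END - T_START) / T_END
print(f"{numeric:.4f} {exact:.4f} net: {exact + ds_room:.3f} J/K")
```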

Psychological entropy

Psychological entropy is based on the idea that humans seek to manage their local entropy levels (e.g. to keep them from becoming too high). If the brain estimates that entropy levels are too high, this can cause stress, anxiety, and similar states.[12]

Entropy in fiction

- Isaac Asimov's "The Last Question", a short science fiction story about entropy

- Thomas Pynchon, an American author who deals with entropy in many of his novels

- Diane Duane's Young Wizards series, in which the protagonists' ultimate goal is to slow down entropy and delay heat death.

- Gravity Dreams by L.E. Modesitt Jr.

- The Planescape setting for Dungeons & Dragons includes the Doomguard faction, who worship entropy.

- Arcadia, a play by Tom Stoppard, explores entropy, the arrow of time, and heat death.

- Stargate SG-1 and Stargate Atlantis, science-fiction television shows in which a ZPM (Zero Point Module) is depleted when it reaches maximum entropy

- In DC Comics's series Zero Hour, entropy plays a central role in the continuity of the universe.

- A post-Crisis issue of Superman, in which Doomsday is brought to the end of time and entropy is discussed.

- H.G. Wells' novel The Time Machine had a theme that was based upon entropy and the idea of humans evolving into two species, each of which is degenerate in its own way. Such a process is often incorrectly termed devolution.

- "Logopolis", an episode of Doctor Who

- Asemic Magazine is an Australian publication that is exploring entropy in literature.

- Philip K. Dick's UBIK, a science fiction novel with entropy as an underlying theme.

- Peter F. Hamilton's The Night's Dawn Trilogy, a trilogy with entropy as an underlying theme.

- In Savage: The Battle for Newerth, the Beast Horde class can build Entropy Shrines and Spires.

- Jasper Fforde's Thursday Next series, specifically Lost in a Good Book. Entropy is the force of nature that causes the most unlikely things to happen, and Thursday Next uses an entroposcope to enter the world of fiction.


References

- [1] Perrot, Pierre (1998). A to Z of Thermodynamics. Oxford University Press. ISBN 0-19-856552-6.

- [2] Lambert, Frank L. A Student’s Approach to the Second Law and Entropy.

- [3] Clausius, Rudolf (1850). On the Motive Power of Heat, and on the Laws which can be deduced from it for the Theory of Heat. Poggendorff's Annalen der Physik, LXXIX (Dover reprint). ISBN 0-486-59065-8.

- [4] Laidler, Keith J. (1995). The Physical World of Chemistry. Oxford University Press. ISBN 0-19-855919-4.

- [5] Published in Poggendorff’s Annalen, Dec. 1854, vol. xciii, p. 481; translated in the Journal de Mathématiques, vol. xx, Paris, 1855, and in the Philosophical Magazine, August 1856, s. 4, vol. xii, p. 81.

- [6] Clausius, Rudolf (1850-1865). Mechanical Theory of Heat.

- [7] EntropyOrderParametersComplexity.pdf

- [8] Lambert, Frank L. Disorder - A Cracked Crutch For Supporting Entropy Discussions.

- [9] Lambert, Frank L. The Second Law of Thermodynamics (6).

- [10] Lambert, Frank L. Entropy Is Simple, Qualitatively.

- [11] Lambert, Frank L. Entropy and the Second Law of Thermodynamics.

- [12] Hirsh, J. H., Peterson, J. B., & Mar, R. A. (2012, December). Psychological Entropy: A Framework for Understanding Uncertainty-Related Anxiety. ResearchGate. https://www.researchgate.net/publication/221752816_Psychological_Entropy_A_Framework_for_Understanding_Uncertainty-Related_Anxiety

Further reading

- Fermi, Enrico (1937). Thermodynamics, Prentice Hall. ISBN 0-486-60361-X.

- Kroemer, Herbert; Charles Kittel (1980). Thermal Physics, 2nd Ed., W. H. Freeman Company. ISBN 0-7167-1088-9.

- Penrose, Roger (2005). The Road to Reality: A Complete Guide to the Laws of the Universe. ISBN 0-679-45443-8.

- Reif, F. (1965). Fundamentals of statistical and thermal physics, McGraw-Hill. ISBN 0-07-051800-9.

- Goldstein, Martin; Goldstein, Inge F. (1993). The Refrigerator and the Universe. Harvard University Press. ISBN 0-674-75325-9.

External links

- Mechanical Theory of Heat – Nine Memoirs on the development of concept of "Entropy" by Rudolf Clausius [1850-1865]

- Max Jammer (1973). Dictionary of the History of Ideas: Entropy

- Frank L. Lambert, Disorder - A Cracked Crutch For Supporting Entropy Discussions, Journal of Chemical Education 79 187-192 (2002).

- Frank L. Lambert, A Student’s Approach to the Second Law and Entropy — Thorough presentation aimed at chemistry students of entropy from the viewpoint of dispersal of energy, part of an extensive series of websites by the same author.

- Metabolic Metaphysics_Entropy: Entropy as the catabolic leg of nature's generalized metabolism


This page uses Creative Commons Licensed content from Wikipedia.